Dual-Cone View Culling for Virtual Reality Applications


I once had an idea that nagged at me for quite a while, until I implemented it in my bachelor’s thesis: isn’t the human view volume more cone-shaped than frustum-shaped? Also, the lenses of virtual reality headsets are round, so the portion of the image you can see on the screen should be round as well! [1]

With that presumption, wouldn’t it be more accurate and maybe even more efficient to use cones instead of frustum volumes for culling in virtual reality rendering?

I took it upon myself to find out:

Intersection Math

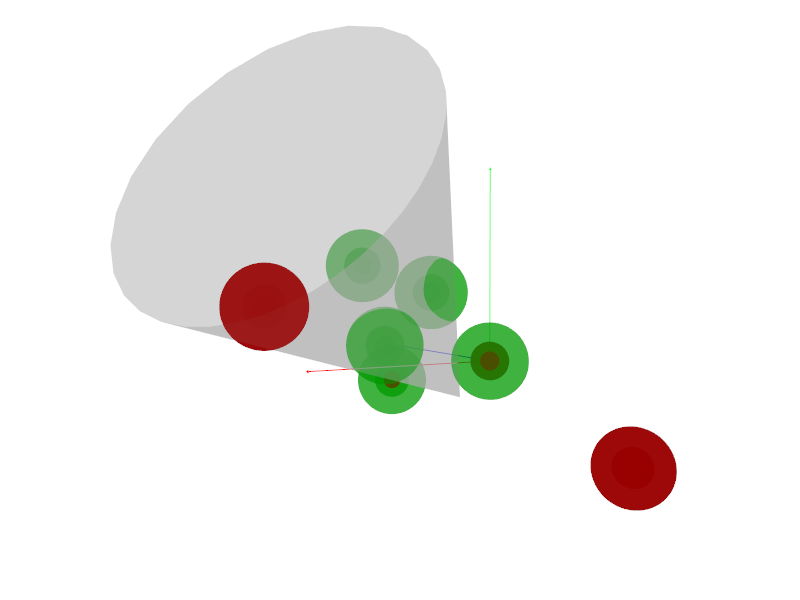

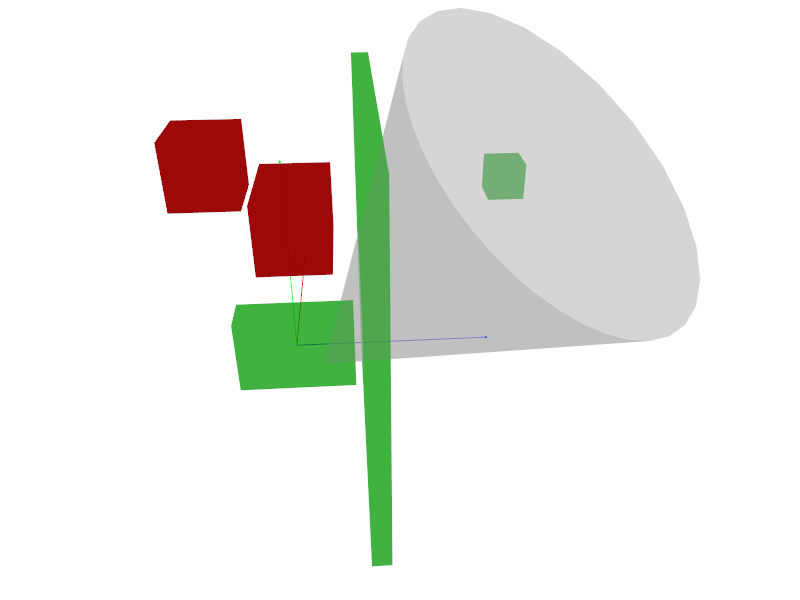

The first challenge was to figure out the intersection math for cones and bounding primitives: spheres, axis-aligned bounding boxes and triangles.

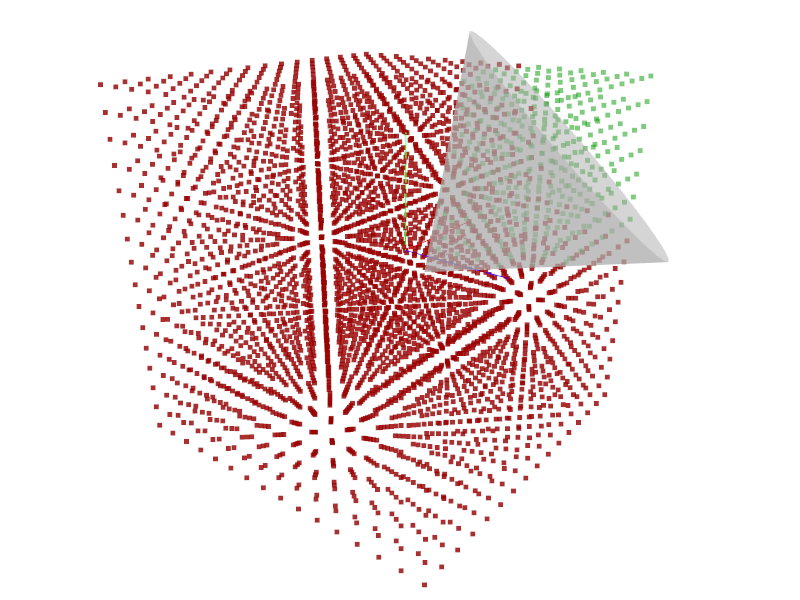

I wrote a small intersection visualization tool using Magnum to be able to verify my unit tests and nicely visualize each case:

Most of my math code is contributed to Magnum, with the exception of the cone-triangle intersection: I didn’t actually get that to fully work and did not end up needing it, as implementing primitive-based culling on the GPU was pushed out of the scope of the thesis. You can tell from the image that there are still a couple of false positives (triangles incorrectly marked as intersecting with the cone). [2]

At this point I want to thank the guys of realtimerendering.com for their table of object intersections. I found the papers by Geometric Tools there, which I based my methods on.

The exception is cone-AABB, which is entirely missing from that table (am I the first who needed that?). I came up with my own method for it, which I will write a separate blog post on tomorrow.
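To give a feel for what such a test looks like, here is a minimal sketch of a cone-sphere check. It is not the code I contributed to Magnum and not necessarily the method from the Geometric Tools papers. It is a simple 2D reduction and assumes an infinite cone with a unit-length axis and a half-angle below 90°. The `Vec3` type and all function names are made up for this example.

```cpp
#include <cassert>
#include <cmath>

// Minimal 3D vector for this sketch (hypothetical, not Magnum's Vector3).
struct Vec3 { double x, y, z; };

static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static double dot(Vec3 a, Vec3 b) { return a.x*b.x + a.y*b.y + a.z*b.z; }

// True if the sphere touches or overlaps an infinite solid cone.
// apex: tip of the cone; axis: unit vector pointing into the cone;
// halfAngle: angle between axis and cone surface in radians, in (0, pi/2).
bool sphereIntersectsCone(Vec3 center, double radius,
                          Vec3 apex, Vec3 axis, double halfAngle) {
    assert(halfAngle > 0.0 && halfAngle < 1.5707963267948966);
    assert(std::abs(dot(axis, axis) - 1.0) < 1e-9);

    const Vec3 p = sub(center, apex);
    const double h = dot(p, axis);                                // along the axis
    const double d = std::sqrt(std::fmax(0.0, dot(p, p) - h*h));  // away from the axis

    const double c = std::cos(halfAngle);
    const double s = std::sin(halfAngle);

    // In the half-plane (h, d) the cone surface is the ray t*(c, s), t >= 0.
    // Project the sphere center onto that ray.
    const double alongSurface = h*c + d*s;
    if (alongSurface < 0.0) {
        // The center lies "behind" the apex, so the apex is the closest point.
        return dot(p, p) <= radius*radius;
    }

    // Signed distance to the surface line; negative means inside the cone.
    return d*c - h*s <= radius;
}
```

With the apex at the origin, the axis along +z and a half-angle of 45°, a unit sphere at (0, 0, 5) intersects the cone, while a unit sphere at (5, 0, 0) does not.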

Implementation in Unreal Engine

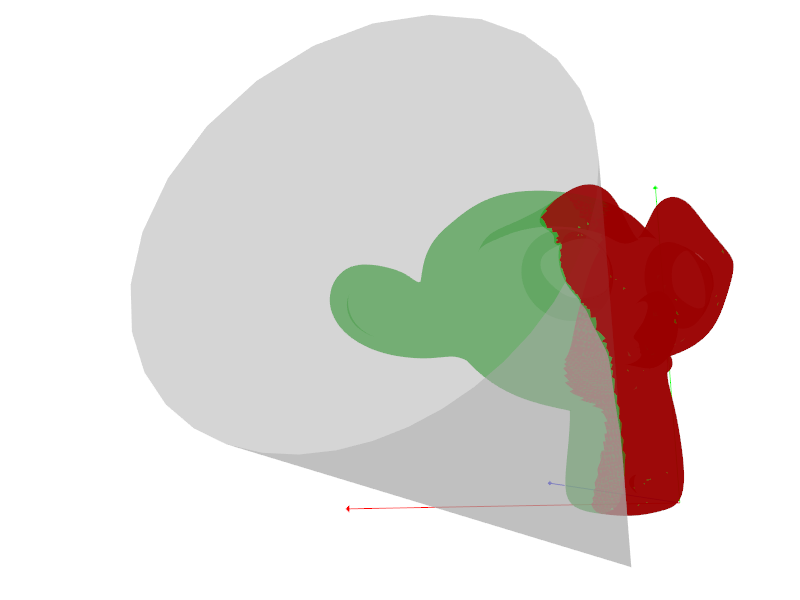

At Vhite Rabbit we have been using Unreal Engine 4 ever since we switched from an in-house game engine (which was sadly too slow to develop with). Hence, to be able to use it for our games should it work, I wanted to prove the new culling approach in the real world by implementing it in UE4 and testing it with a scene from the Infinity Blade: Grass Lands asset pack that Epic Games provides for free.

And I’m glad I did! The results I had from benchmarking the individual intersection methods were originally quite a bit more optimistic than they should have been. I found several bugs in the methods where testing hadn’t been sufficient in edge cases, and the overall conclusion of the thesis changed by 180°.

If you accepted the Epic Games EULA and have access to the main Unreal Engine repository, you should be able to find my implementation of it here.

Results

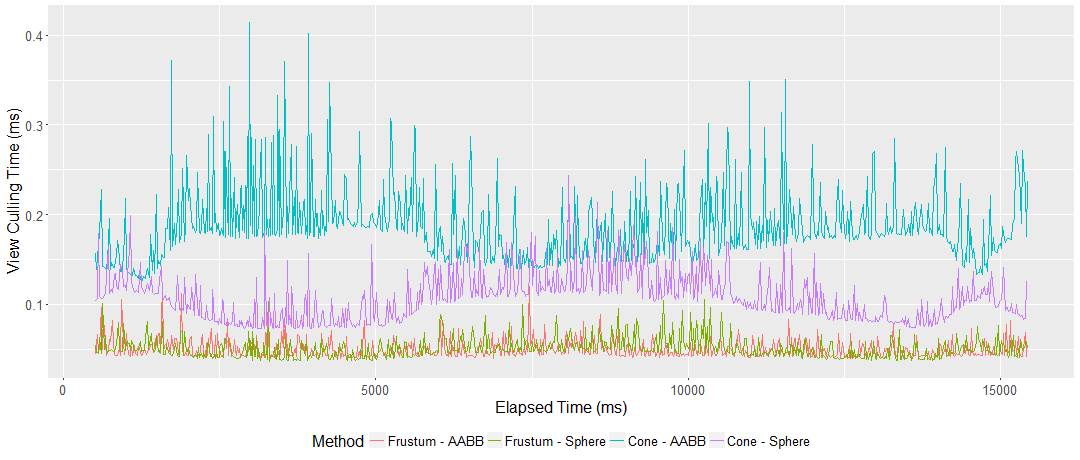

In the end I figured out that I had compared against rather naive frustum intersection implementations during benchmarking and that the cone math was not able to hold up against more sophisticated SIMD implementations. On the other hand, my code was not optimized that far either.

The CPU culling I implemented was slower by a large factor. Properly optimized, it should be possible to bring that down, though. More interesting and shocking to me was that, contrary to all expectations, the accuracy was lower than with classical frustum culling.
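For reference, a "naive" frustum test in the sense used here could look roughly like the sketch below: one scalar plane check after another, no SIMD. This is an illustration only, not the code I benchmarked and not what UE4 does. The `Plane` and `Frustum` types and the `boxFrustum` helper are made up for the example, and plane normals are assumed to be unit length and to point into the frustum.

```cpp
#include <array>
#include <cassert>

struct Vec3 { double x, y, z; };

// Plane dot(normal, x) + offset = 0 with a unit normal pointing inside.
struct Plane { Vec3 normal; double offset; };

using Frustum = std::array<Plane, 6>;

// Conservative sphere test: returns false only if the sphere lies entirely
// behind at least one plane. Can report false positives near frustum corners.
bool sphereMayIntersectFrustum(const Frustum& frustum, Vec3 center, double radius) {
    assert(radius >= 0.0);
    for (const Plane& p : frustum) {
        const double signedDistance = p.normal.x*center.x
                                    + p.normal.y*center.y
                                    + p.normal.z*center.z
                                    + p.offset;
        if (signedDistance < -radius) return false;  // fully outside this plane
    }
    return true;
}

// Hypothetical helper: a box-shaped "frustum" [-e, e]^3, handy for quick checks.
Frustum boxFrustum(double e) {
    return {{
        {{ 1.0,  0.0,  0.0}, e}, {{-1.0,  0.0,  0.0}, e},
        {{ 0.0,  1.0,  0.0}, e}, {{ 0.0, -1.0,  0.0}, e},
        {{ 0.0,  0.0,  1.0}, e}, {{ 0.0,  0.0, -1.0}, e},
    }};
}
```

A SIMD version typically evaluates several planes or several spheres per instruction, which is where a scalar cone test starts to lose ground.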

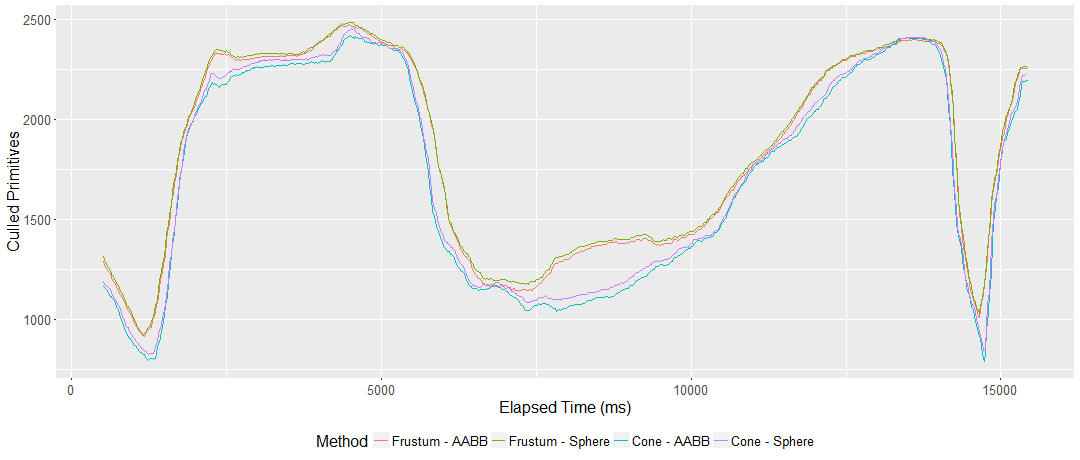

(Camera flythrough, culled primitives over time and frustum cull duration over time with Oculus Rift.)

I was mostly testing on the Oculus Rift, where you actually see not only a circular cutout of the rendered image, but the entire distorted image through the lenses. That is likely also the reason why they don’t use a stencil mesh [1], as is done with the HTC Vive. Even on most other headsets (e.g. Daydream View) all of the rendered image is visible through the lenses.

The cone therefore fully contained the frustum rather than the other way around and the optimization was pretty much useless…
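To put a number on that: if the whole rendered image is visible, the cone has to reach the corners of the frustum, which makes it wider than the frustum itself. The sketch below computes both the smallest enclosing cone and the largest cone that fits inside. It assumes a symmetric frustum centered on the view axis, which real headset projections generally are not, so treat it as a back-of-the-envelope illustration rather than something taken from the thesis. The function names are made up for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Half-angle (radians) of the smallest cone around the view axis that contains
// a symmetric frustum with full horizontal/vertical field of view fovX/fovY
// (radians). The cone has to reach the frustum's corner rays.
double enclosingConeHalfAngle(double fovX, double fovY) {
    const double pi = 3.14159265358979323846;
    assert(fovX > 0.0 && fovX < pi && fovY > 0.0 && fovY < pi);
    const double tx = std::tan(0.5*fovX);
    const double ty = std::tan(0.5*fovY);
    // A corner ray has direction (tx, ty, 1); its angle to the axis (0, 0, 1):
    return std::atan(std::sqrt(tx*tx + ty*ty));
}

// Half-angle (radians) of the largest cone around the view axis that fits
// inside the same frustum: limited by the narrower of the two directions.
double inscribedConeHalfAngle(double fovX, double fovY) {
    const double pi = 3.14159265358979323846;
    assert(fovX > 0.0 && fovX < pi && fovY > 0.0 && fovY < pi);
    return 0.5*std::min(fovX, fovY);
}
```

For a 90° by 90° frustum the enclosing cone has a half-angle of about 54.7°, compared to 45° for the inscribed one. Only the inscribed kind can cull more than the frustum does, and it is only valid if nothing outside the circle is visible.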

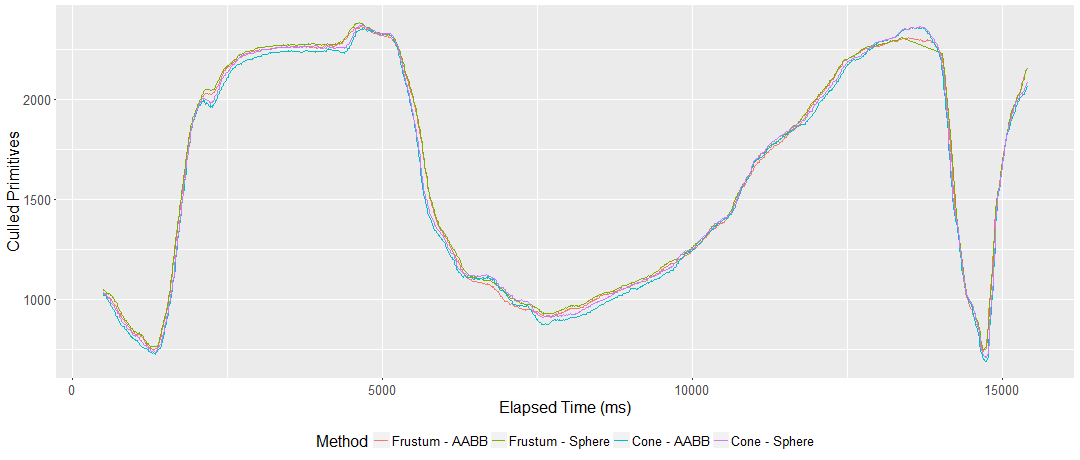

I didn’t give up there, actually. For the presentation of my thesis I wanted to check whether this works for the Vive at least. And yes, this worked much better!

(Same camera flythrough, culled primitives over time with HTC Vive.)

While still not better in all cases (probably because the view volume is not a fully symmetrical cone with a circular base), at least I didn’t feel like my idea was totally failing its reality check.

Conclusion

My original long-shot hope was to find a view culling solution for VR that is not only more efficient but possibly also about as simple to implement as classical view frustum culling. That hope aside, I still believe that it would be possible to make this more efficient, maybe by using asymmetrical cones and SIMD or by leveraging normalized device coordinates (NDCs) for primitive culling. Either way, this is the point where I stopped pursuing the idea.

I hope you enjoyed this post and got something out of this! I for my part learned to check my preconditions more thoroughly before building out implementations of ideas on top of them.

[1] The idea was originally inspired by the “Advanced VR Rendering” GDC talk by Alex Vlachos.

[2] I have fixed a couple of these since (the image is not fully up to date), but it is still not fully correct.

Written in 90 minutes, edited in 15 minutes.